Research Focus

The Machine Learning Department and its members conduct a wide array of research on developing and applying artificial intelligence (AI) and machine and deep learning (ML/DL) algorithms for translational cancer research and discovery. Application areas include quantitative image analysis (radiomics), digital pathology (pathomics), cancer diagnosis and prognosis, information retrieval and data integration, outcomes and behavioral sciences, and molecular and computational biology. The department also pursues basic ML/DL research in areas pertinent to oncology, such as visual analytics and explainable AI, automated ML/DL, and information-theoretic approaches.

Research projects include:

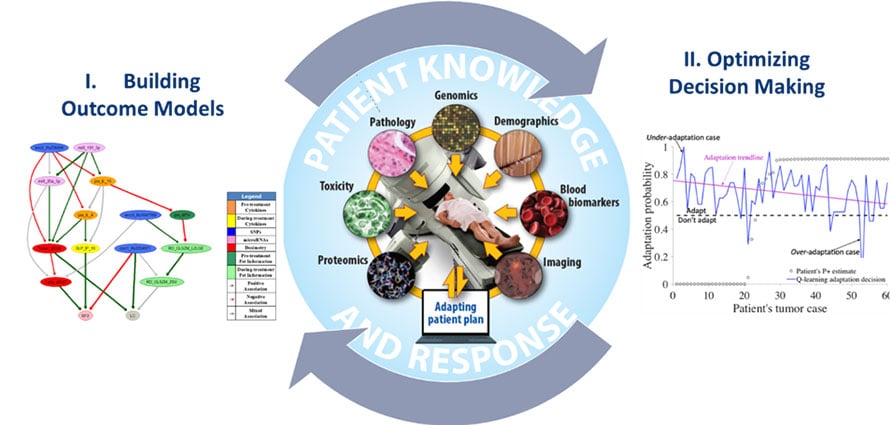

Optimal Decision Making in Radiotherapy Using Panomics Analytics

Funding source: NIH/NCI R01 CA233487

The long-term goal of this project is to overcome barriers related to prediction uncertainties and human-computer interactions, which are currently limiting the ability to make personalized clinical decisions for real-time response-based adaptation in radiotherapy from available data.

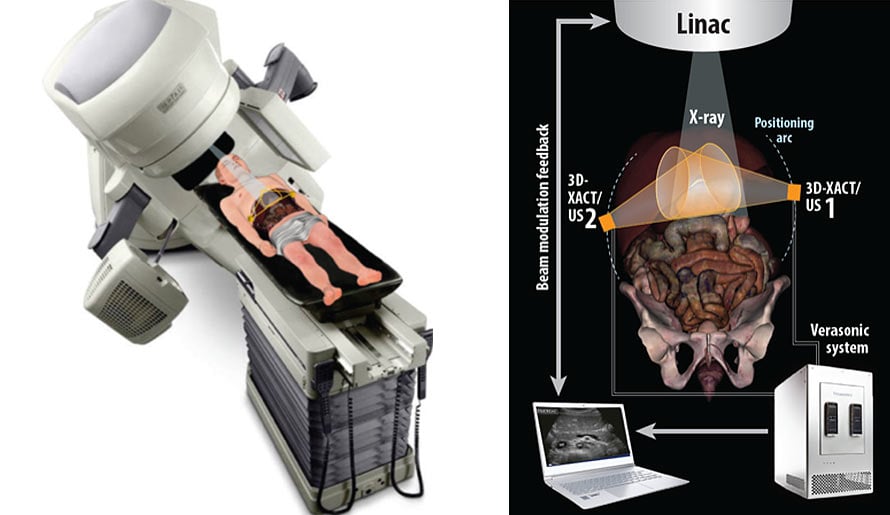

Combined Radiation Acoustics and Ultrasound Imaging for Real-Time Guidance in Radiotherapy

Funding source: NIH/NCI R37 CA222215

This project aims to develop an evaluated and integrated tomographic feedback system that uses X-ray acoustics (XACT) and advanced ultrasound (US) images to monitor a patient’s current status during radiotherapy delivery.

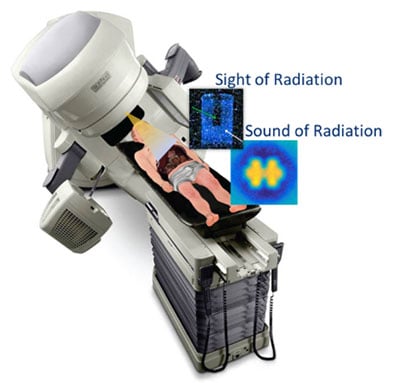

Cerenkov Multi-Spectral Imaging (CMSI) for Adaptation and Real-Time Imaging in Radiotherapy

Funding source: NIH/NCI R41 CA243722

Sponsor: Endectra LLC

In this STTR proposal, Endectra will work with oncology researchers at Moffitt to develop and evaluate a novel Cerenkov Multi-Spectral Imaging (CMSI) technique using new solid-state on-body probes to conduct routine optical measurements of radiation dose and molecular imaging during cancer radiotherapy delivery. This approach is expected to provide more accurate tumor physiological representation and dose adaptation during treatment, reduce overall patient exposure to radiation, and allow for ongoing assessment of tumor physiological parameters. If successful, Endectra will develop CMSI as an alternative cost-saving and effective molecular imaging/targeting modality for routine radiotherapy applications, greatly improving radiotherapy outcomes and yielding a major impact on public health.

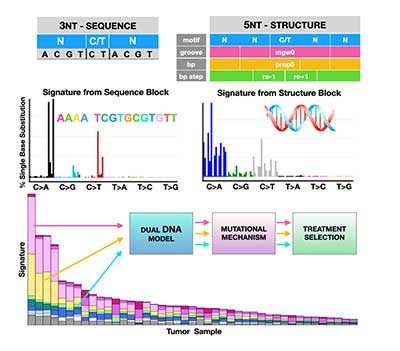

Classification of DNA Structural Mutational Profiles

Detection and classification tools are urgently needed to improve cancer patients’ survival. It has been demonstrated that mutations due to structural DNA aberrations are a better measure of DNA damage. Hence, in this project we aim to use information about DNA conformation to better classify cancer types and to predict therapeutic response. Our goal is to advance cancer classification by developing a computational tool that uses structural (conformational) descriptors of the human genome. The resulting computational model will facilitate future analyses of tumor metabolism, morphology, drug screening, and treatment optimization. More information: Karolak Lab Page.

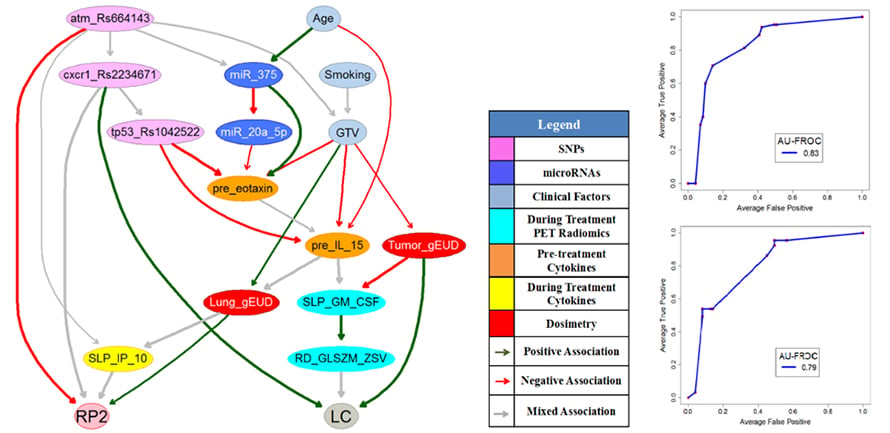

Human-in-the-Loop Adaptive Radiotherapy for Cancer Cure

The objective of adaptive radiotherapy is to modify the radiation treatment plan during a course of radiotherapy based on the patient’s response, balancing tumor local control (LC) against radiation-induced toxicities (RITs). LC and RITs may depend on the radiation dose and on the patient’s physical, clinical, biological, imaging, and genomic characteristics before and during radiotherapy. While general machine learning approaches have the potential to discover useful patterns in these data over the course of treatment, they may not be used for clinical decision-making until they gain physicians’ trust. Therefore, the purpose of this project is to develop human-in-the-loop adaptive mechanisms that allow physicians to participate in integrating diverse multimodal information, predicting radiation treatment outcomes, and identifying optimal, robust treatment plans before and during radiotherapy.

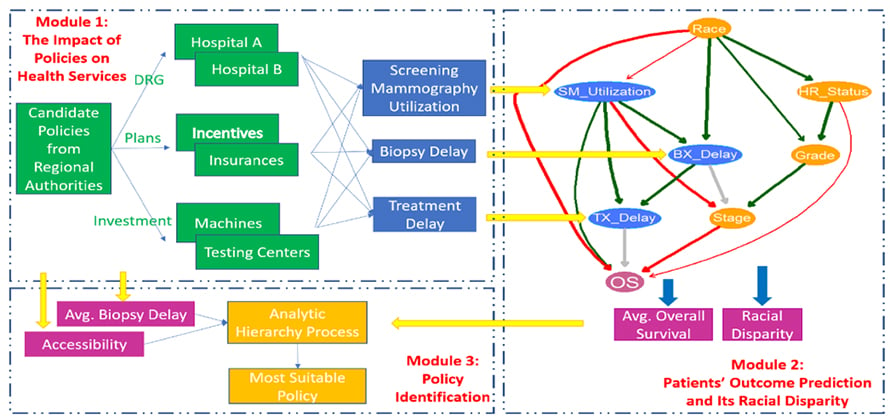

Customized Healthcare Policy to Improve Cancer Patients’ Healthcare Quality for a Specific Area

The healthcare quality of cancer patients can be evaluated not only from public health service measures, such as accessibility and affordability, screening utilization, adjuvant therapy, and delays in biopsy and treatment, but also from treatment outcomes, such as overall survival and associated racial disparities. Healthcare quality varies across regions because of differences in local resources, socioeconomic conditions, and other factors. Policies set by authorities have the potential to improve patients’ healthcare quality in a region by reorganizing the delivery of public health services among healthcare providers and patients. However, how stakeholders interact in response to different policies is unknown, so choosing an appropriate strategy for improving healthcare quality in a given area remains challenging. The purpose of this project is to develop data-driven and model-based approaches to evaluate the impact of potential healthcare policies on cancer patients’ healthcare quality and to customize the most suitable policy for a region by balancing multiple healthcare quality criteria.

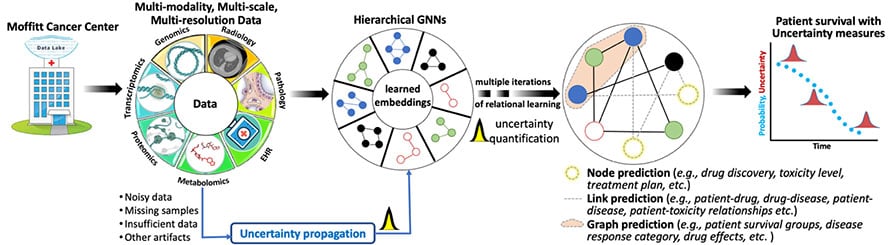

Hierarchical Bayesian Graph Neural Networks for Multi-scale Learning

Technological advancements have led to acquiring a large and heterogeneous pool of multi-scale, multi-modality, multi-resolution data related to cancer diagnosis, prognosis, and treatment planning. Data sets from individual modalities (e.g., radiology, histopathology, genetic, proteomic, and medical history) are traditionally analyzed in isolation to answer various questions. Multi-modal machine learning has the potential to unravel the hidden patterns through convergence and integration across attributes, modalities, and resolutions. However, integration across such diverse and heterogeneous data sets is inherently challenging due to each data set's different scale (both time and space), resolution, and asynchronous data collection methodologies.

We are using Bayesian hierarchical graph neural networks (BHGNNs) to integrate and learn from these multi-scale, heterogeneous data sets. Our proposed models can synergistically learn from various data sets and answer clinically relevant diagnostic and prognostic questions. The figure below shows the schematic layout of our approach.

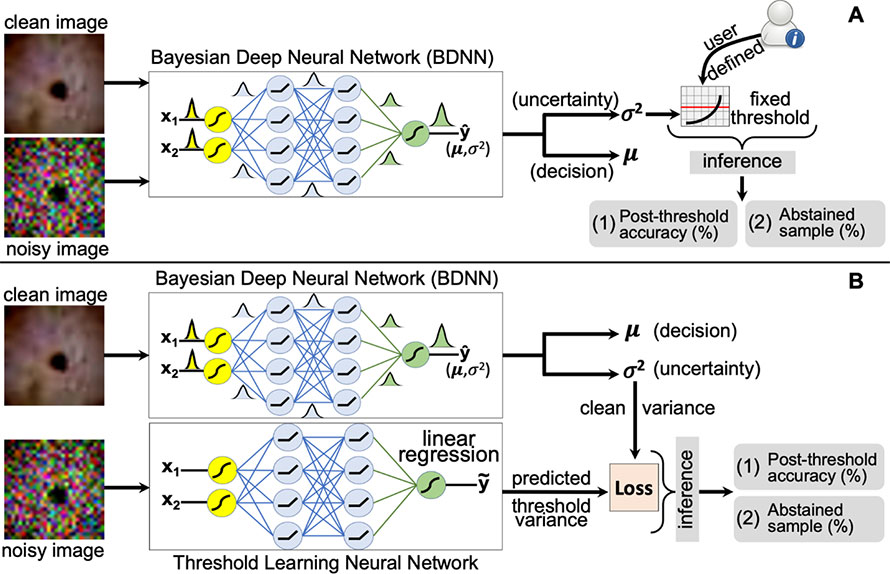

Detection of Failure in Deep Learning of Medical Imaging

Deep neural networks (DNNs) have started to find their role in the modern healthcare system. DNNs are being developed for diagnosis, prognosis, treatment planning, and outcome prediction for various diseases. For widespread adoption of DNNs in modern healthcare, their trustworthiness and reliability are becoming increasingly important. An essential aspect of trustworthiness is detecting the performance degradation and failure of deployed DNNs in medical settings.

The softmax output values produced by DNNs are not a calibrated measure of model confidence and are generally overconfident, with the softmax values being higher than the model accuracy. This gap between softmax confidence and accuracy widens further for wrong predictions and noisy inputs. We employ recently proposed Bayesian deep neural networks (BDNNs) to learn uncertainty in the model parameters. These models simultaneously output the predictions and a measure of confidence in the predictions. The predictive confidence learned by BDNNs remains well calibrated under various noisy conditions.
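As a rough illustration of the overconfidence described above, the following Python sketch compares the mean maximum-softmax value with the accuracy on a small batch. The logits and labels are made-up toy values, and the helper names `softmax` and `confidence_accuracy_gap` are hypothetical; this is not the department's evaluation code.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def confidence_accuracy_gap(logits_batch, labels):
    """Return (mean max-softmax confidence, accuracy, confidence - accuracy)."""
    confidences = []
    correct = 0
    for logits, label in zip(logits_batch, labels):
        probs = softmax(logits)
        # Predicted class is the index of the largest probability.
        pred = max(range(len(probs)), key=probs.__getitem__)
        confidences.append(probs[pred])
        correct += int(pred == label)
    mean_conf = sum(confidences) / len(confidences)
    accuracy = correct / len(labels)
    return mean_conf, accuracy, mean_conf - accuracy


# Toy logits and labels, invented for illustration only.
logits_batch = [
    [4.0, 0.0, 0.0],
    [0.0, 5.0, 0.0],
    [3.0, 0.0, 0.0],
    [0.0, 0.0, 6.0],
]
labels = [0, 1, 1, 0]

mean_conf, accuracy, gap = confidence_accuracy_gap(logits_batch, labels)
print(f"mean confidence={mean_conf:.3f} accuracy={accuracy:.3f} gap={gap:.3f}")
```

A positive gap means the softmax values overstate how often the model is right. In this toy batch the model is wrong on half the samples while still reporting softmax values above 0.9.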

We use these reliable confidence values for monitoring performance degradation and failure detection in DNNs. We have proposed two failure detection methods. The first method defines a fixed threshold value based on how the predictive confidence changes with the signal-to-noise ratio (SNR) of the test dataset. The second method learns the threshold value with a neural network. Both mechanisms abstain from making a decision when the confidence of the BDNN falls below the threshold and hold the case for manual review. As a result, the model's accuracy on the confident decisions improves for unseen test samples. Our work has the potential to improve the trustworthiness of DNNs and enhance user confidence in the model predictions. The figure shows a schematic layout of both approaches.
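The fixed-threshold variant can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions: the confidence values stand in for BDNN outputs, the threshold of 0.8 is an arbitrary placeholder rather than a value derived from SNR behavior, and the function names `triage_predictions` and `accuracy_on` are hypothetical.

```python
def triage_predictions(predictions, confidences, threshold):
    """Split samples into accepted decisions and cases deferred to manual review."""
    accepted = []  # (sample index, predicted label) pairs the model commits to
    deferred = []  # sample indices held for manual review
    for i, (pred, conf) in enumerate(zip(predictions, confidences)):
        if conf >= threshold:
            accepted.append((i, pred))
        else:
            deferred.append(i)
    return accepted, deferred


def accuracy_on(decisions, labels):
    """Accuracy over (index, prediction) pairs; None if there are no decisions."""
    if not decisions:
        return None
    return sum(int(pred == labels[i]) for i, pred in decisions) / len(decisions)


# Toy values invented for illustration; confidences stand in for BDNN outputs.
predictions = [1, 0, 1, 1, 0, 1]
confidences = [0.95, 0.55, 0.91, 0.60, 0.88, 0.52]
labels = [1, 1, 1, 0, 0, 0]
THRESHOLD = 0.8  # assumed placeholder, not a value from the project

accepted, deferred = triage_predictions(predictions, confidences, THRESHOLD)
overall = accuracy_on(list(enumerate(predictions)), labels)
selective = accuracy_on(accepted, labels)
print(f"overall={overall:.2f} selective={selective:.2f} deferred={deferred}")
```

In this toy example the low-confidence samples are exactly the misclassified ones, so accuracy on the accepted decisions is higher than overall accuracy. Real data would separate less cleanly, and raising the threshold trades coverage for accuracy.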

Patient Monitoring with eXtended Reality and IoT

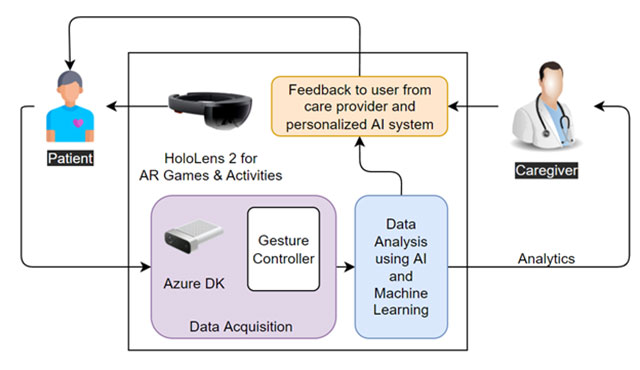

Our project aims to provide an intelligent system based on augmented reality (AR) and artificial intelligence (AI) for monitoring patients’ mental and physical health during and after cancer treatment. We refer to AR and AI systems as eXtended Reality. The system comprises various AR games and scenarios that engage the patient in targeted activities. The patient’s data is collected, analyzed using AI, and shared with health care providers.

Healthcare providers use the insights from our AI algorithms to optimize the recovery plan and provide feedback to the patient. The ultimate objective of the study is to personalize the cancer recovery plan and optimize recovery parameters, goals, and activities for each patient’s needs. The proposed system includes AR devices (Microsoft HoloLens 2), Azure Kinect DK, various types of wearable sensors, and in-house AI algorithms. A schematic layout of the system is shown in the figure below.

Read more about these research projects on our team's lab pages, listed under the corresponding faculty members.